Radiographic Testing can be summarized as interpreting the differential absorption of X-rays. But X-rays are a band of electromagnetic radiation that cannot be detected with the human senses (sight, smell, taste, etc.), so how do we interpret them?

Traditionally, this has been done using photographic film. The film has an emulsion layer containing silver halide crystals. These tiny crystals are light-sensitive, and because X-rays are electromagnetic radiation just like visible light (only far more energetic), the crystals also react when exposed to X-rays.

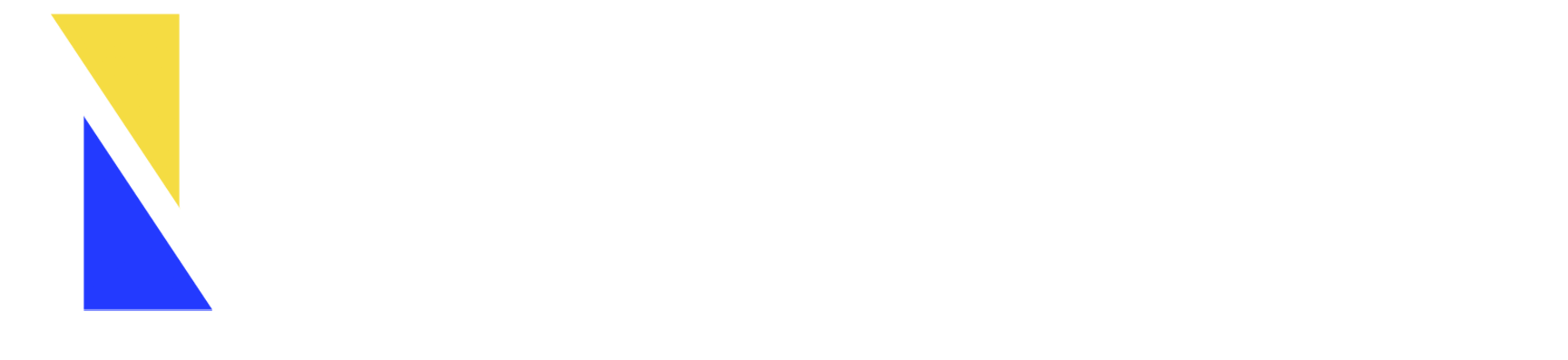

The X-rays are attenuated at varying rates depending on material thickness and density. Fewer X-rays reach the film (and its crystals) when imaging a thick section of an object than when imaging a thin section. Similarly, fewer X-rays reach the film when imaging a denser part made of steel than a less dense part made of aluminum. This differential absorption (the difference in how many X-rays make their way to each silver halide crystal) creates a latent image on the film. Chemical processing then turns the latent image into a permanent radiograph: the developer converts the exposed crystals into dark metallic silver, and the fixer removes the unexposed crystals.
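The effect of thickness and density can be sketched with the idealized attenuation law for a narrow, single-energy beam, I = I0 · e^(−μx), where μ is the material's linear attenuation coefficient (generally higher for denser materials such as steel) and x is the thickness. This is a simplification: real X-ray tubes emit a spread of energies and scattered radiation adds to the signal, so treat the numbers as illustrative. The μ values below are made-up round figures chosen only to show the trend, not reference data.

```python
import math


def transmitted_fraction(mu_per_cm: float, thickness_cm: float) -> float:
    """Return I / I0 for an idealized narrow, monoenergetic beam.

    Beer-Lambert law: I = I0 * exp(-mu * x). Scatter and the spread of
    energies in a real X-ray tube spectrum are ignored.
    """
    if mu_per_cm < 0 or thickness_cm < 0:
        raise ValueError("mu and thickness must be non-negative")
    return math.exp(-mu_per_cm * thickness_cm)


# Hypothetical attenuation coefficients (1/cm), for illustration only.
MU_LIGHT = 0.5  # stand-in for a less dense material such as aluminum
MU_DENSE = 3.0  # stand-in for a denser material such as steel

if __name__ == "__main__":
    for name, mu in (("light", MU_LIGHT), ("dense", MU_DENSE)):
        for x in (1.0, 2.0):
            frac = transmitted_fraction(mu, x)
            print(f"{name:5s} {x:.0f} cm -> {frac:.4f} of the beam gets through")
```

Doubling the thickness or switching to the denser material both cut the transmitted fraction, and that difference from one area of the part to the next is what the film records.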

Film has been the standard medium for capturing radiographic images ever since Wilhelm Röntgen discovered X-rays in 1895 and recorded his images on photographic plates. In fact, it is still widely used in the non-destructive testing (NDT) industry today. However, there are now other options that are steadily gaining adoption.

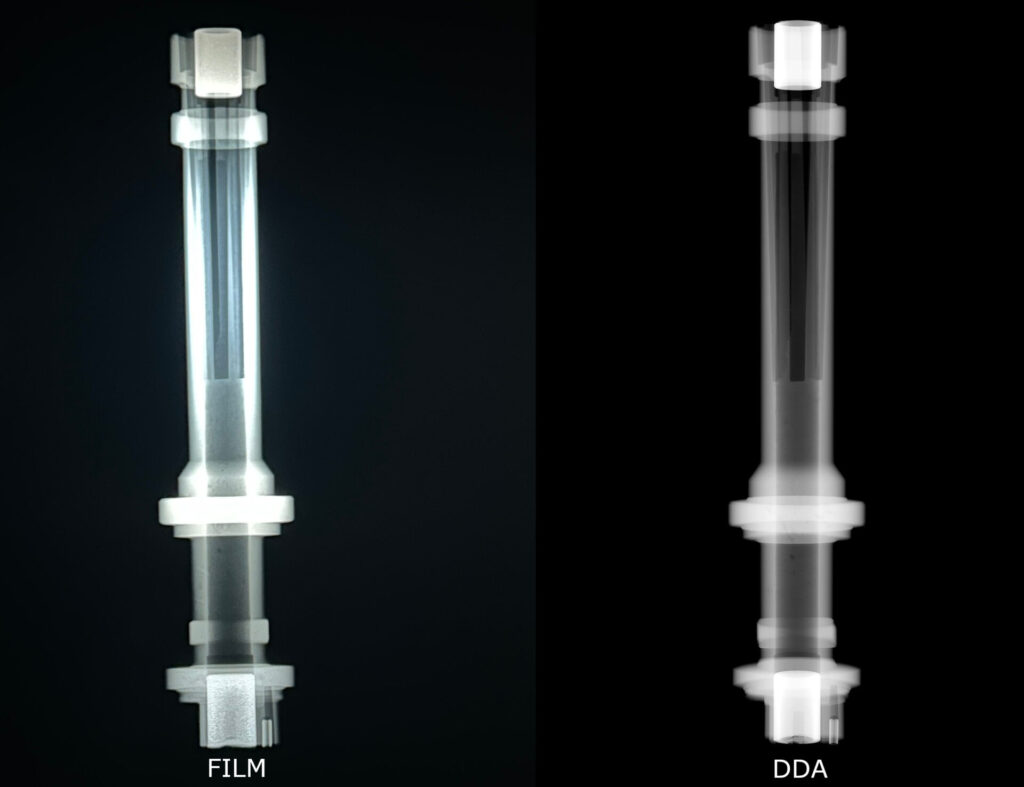

Digital detector array (DDA) technology emerged over the last few decades and is becoming increasingly popular as the technology improves and becomes more affordable. Rather than silver halide crystals, these detectors use a matrix of photodiodes to measure how much X-ray energy reaches each pixel. And unlike film, there is no physical radiograph; instead, the image is viewed digitally on a computer.

Film Density & Digital Image Gray Scale

With film, the optical density of the radiograph cannot be adjusted after the film has been developed. An optimal density range is targeted through the choice of exposure settings, film type, and developing time, but a lot can go wrong. If the area of interest on the developed film is too dark (or too light), the exposure must be reshot. Exposing and developing a film radiograph takes time and expensive materials, all of which go to waste if a reshoot is required.
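Optical density is a logarithmic measure of how much light the developed film blocks: D = log10(I0 / It), where I0 is the light falling on the film from a viewer and It is the light transmitted through it. A density of 2.0 therefore means only 1% of the light gets through. The helpers below are a minimal sketch that computes density from hypothetical light readings and checks it against a target window; the 2.0 to 4.0 limits in the example are placeholders, since the real range comes from whichever code or procedure governs the inspection.

```python
import math


def optical_density(incident: float, transmitted: float) -> float:
    """D = log10(I0 / It); higher values mean a darker film."""
    if incident <= 0 or transmitted <= 0:
        raise ValueError("light intensities must be positive")
    return math.log10(incident / transmitted)


def needs_reshoot(density: float, d_min: float, d_max: float) -> bool:
    """True if the area of interest falls outside the target density range."""
    return not (d_min <= density <= d_max)


if __name__ == "__main__":
    # Hypothetical (incident, transmitted) light readings for three films.
    readings = {
        "too light": (100.0, 10.0),
        "ok": (100.0, 0.5),
        "too dark": (100.0, 0.001),
    }
    for label, (i0, it) in readings.items():
        d = optical_density(i0, it)
        # Placeholder acceptance window of 2.0 to 4.0.
        print(f"{label:9s} D = {d:.2f}, reshoot: {needs_reshoot(d, 2.0, 4.0)}")
```

Once the film is developed there is no knob to turn: a density outside the window can only be fixed by exposing a new film.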

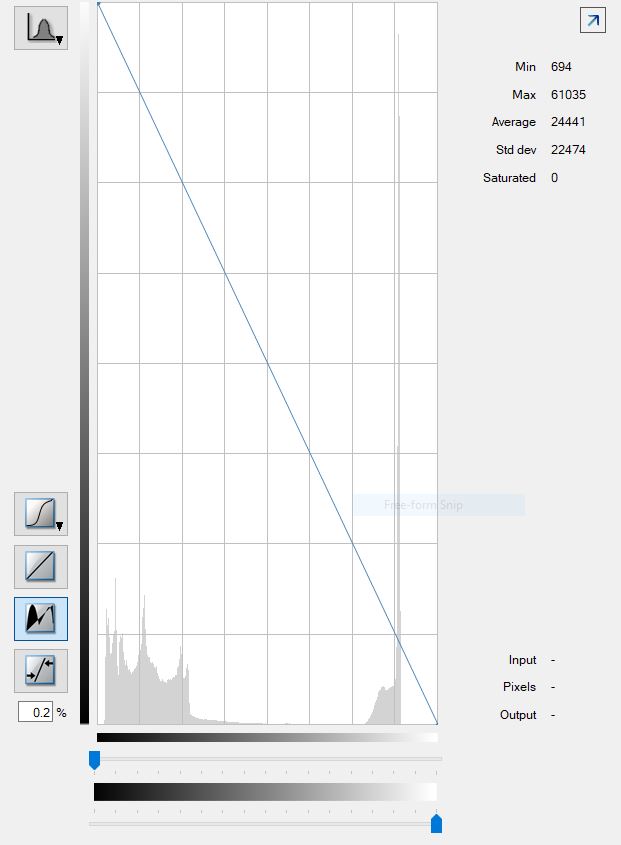

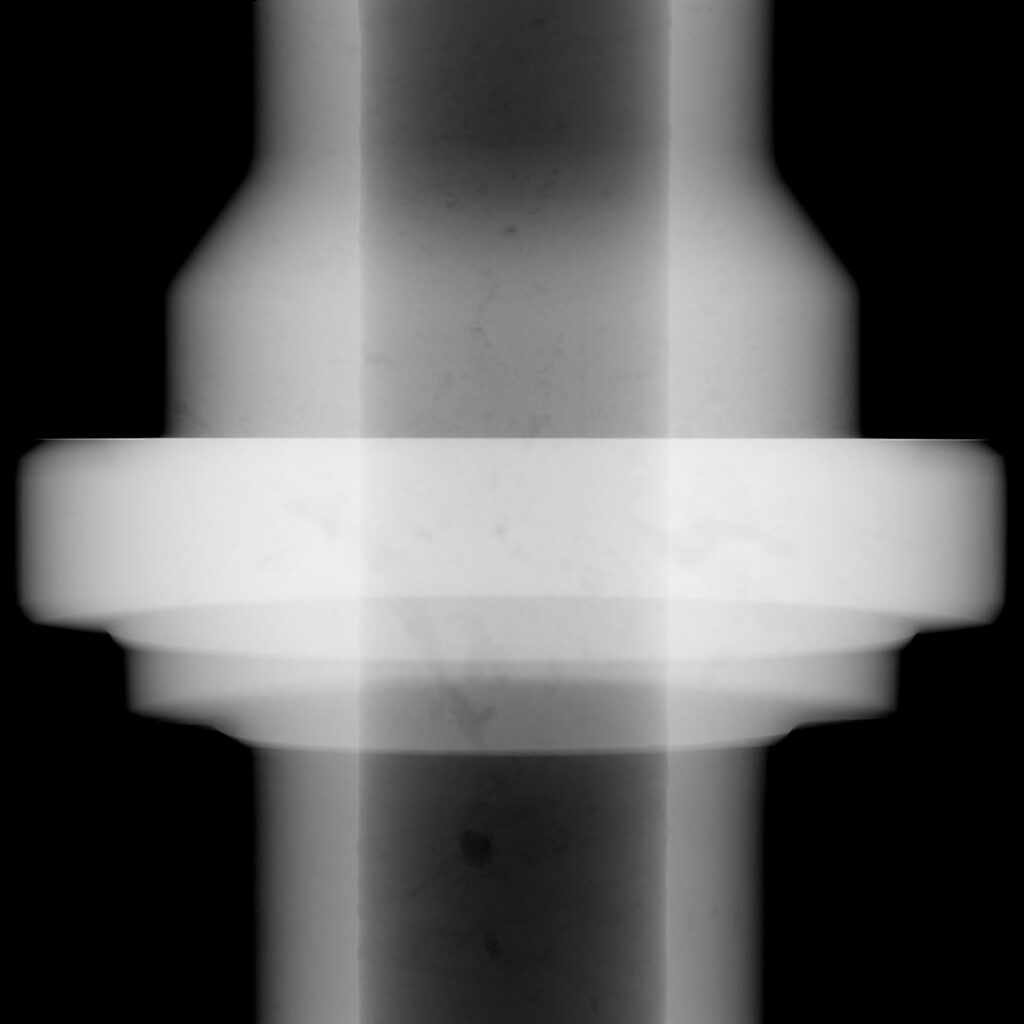

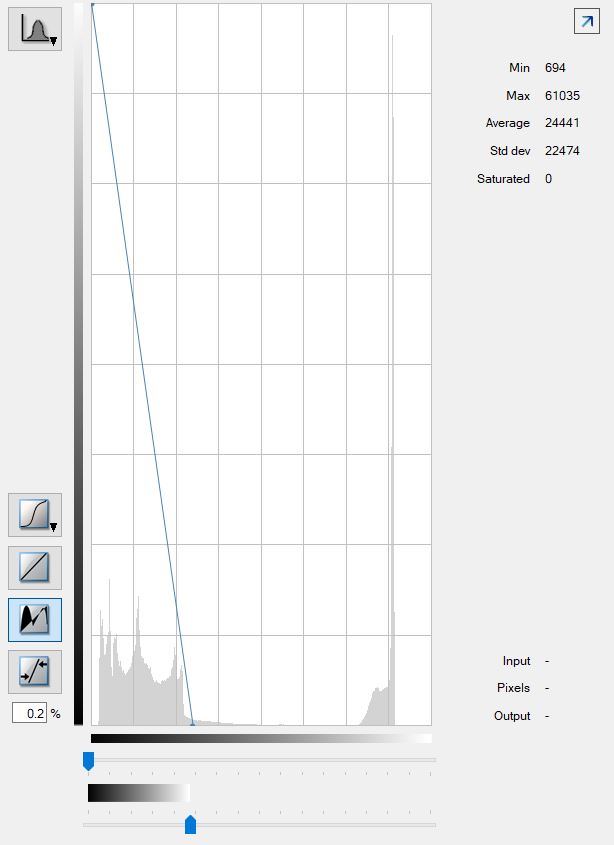

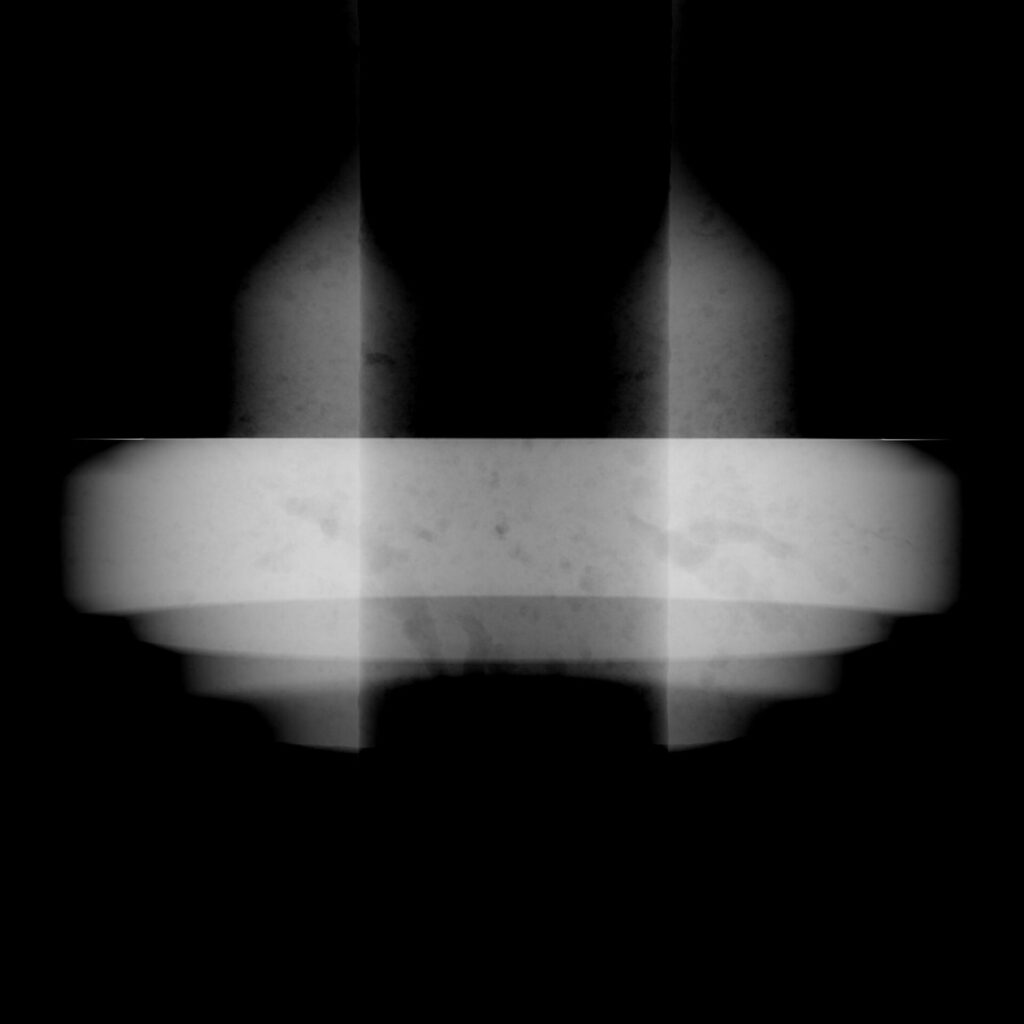

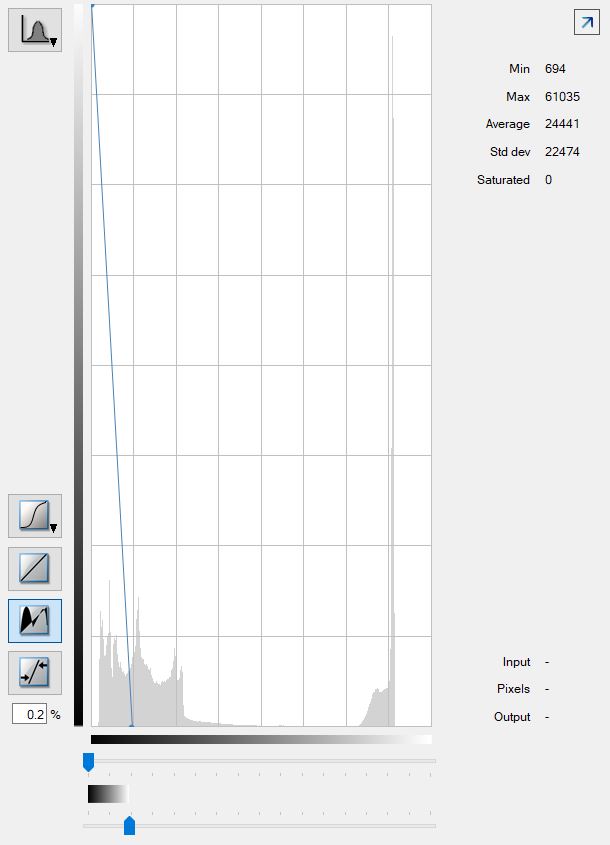

With digital imaging you see the results right away on your computer screen, without lengthy development times or smelly chemicals. Additionally, brightness and contrast can be adjusted electronically, which provides a cushion for less-than-optimal exposure parameters. To put this into context, the human eye can distinguish, by some estimates, about 500 shades of gray, but a digital radiograph taken with a 16-bit detector has 2^16 = 65,536 shades of gray. The 16-bit image holds so much information that we cannot discern it all by viewing the raw image alone. To make full use of these thousands of otherwise indistinguishable shades, we adjust the ‘window/level’ (similar to brightness/contrast) to optimize the viewing window for a given area of interest.
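Window/level is essentially a linear remap of raw detector values onto the display. The sketch below assumes a 16-bit image shown on an ordinary 8-bit screen (256 gray levels); `level` is the center of the window and `width` is its span, both in raw detector units. Raw values below the window are shown as black, values above it as white, and everything inside is stretched across the full display range. The function name and parameters are illustrative, not any particular viewer's API.

```python
def apply_window_level(pixels, level, width, display_max=255):
    """Map raw detector values onto display gray levels (0..display_max).

    pixels: iterable of raw values (e.g. 0..65535 from a 16-bit detector)
    level:  center of the window, in raw units
    width:  span of the window, in raw units (must be positive)
    """
    if width <= 0:
        raise ValueError("window width must be positive")
    low = level - width / 2
    out = []
    for value in pixels:
        frac = (value - low) / width      # 0.0 at bottom of window, 1.0 at top
        frac = min(max(frac, 0.0), 1.0)   # clip values outside the window
        out.append(int(frac * display_max + 0.5))  # round half up to a gray level
    return out


if __name__ == "__main__":
    # Hypothetical raw values across a subtle indication, 400 counts end to end.
    raw = [30000, 30100, 30200, 30300, 30400]
    full = apply_window_level(raw, level=65535 / 2, width=65535)
    narrow = apply_window_level(raw, level=30200, width=400)
    print("full window:  ", full)    # squeezed into just two display levels
    print("narrow window:", narrow)  # spread from black to white
```

With the full window, the 400-count difference collapses into two nearly identical grays; narrowing the window around the area of interest spreads the same data from black to white.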

Portability

One clear shortfall of Digital Radiography is the need for a power source. This is not an issue in a lab, but in the field it means lugging around extension cords and computers. There are some battery-operated DDAs out there, but they do not fully remedy the power issue when operating for long periods of time or in environments with extreme temperatures.

DDAs are also typically rigid and heavy, which makes them tough to set in place, and a dropped DDA could be damaged. Some manufacturers have begun developing flexible DDAs to address this issue.

On the other hand, film is extremely pliable. It can be wrapped around a pipe or cut down to size to fit into tight or oddly shaped areas. Combined with a gamma radiation source, Film Radiography can be performed with no electrical hookups whatsoever.

Another good option for portability is Computed Radiography (CR) with photostimulable phosphor imaging plates. I can’t compare Film & DDA in the field without mentioning CR, but that’s a different can of worms for another post. Just know that CR can be thought of as a hybrid of Film Radiography and DDA Digital Radiography. They all have their use cases.

Automation & AI

With Film Radiography, every piece of film is single-use. Once the silver halide crystals are exposed, they cannot be reverted to their original state; the film must either be developed or discarded. This makes Film Radiography inherently impractical for automated inspections.

Some time can be saved by using custom-built fixtures or an automatic film processor for development, but in general, image acquisition is a very manual and time-consuming process.

Also, because Film Radiography is an analog process that stores data on physical media, its analysis cannot directly benefit from today’s AI computer vision models; the film would first have to be scanned into a digital image.

In contrast, a DDA in Digital Radiography is reusable and can be integrated with PLCs (programmable logic controllers) to build image acquisition programs that are fully automated and repeatable. This saves a great deal of time, and of money spent on consumable materials, compared with Film Radiography.
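An automated, repeatable acquisition program boils down to the same loop every time: move the part (or detector) to a stored position, trigger an exposure, read the image back, and keep it. The sketch below is hypothetical throughout: the `Positioner` and `Detector` interfaces, the inspection plan, and the fake stand-in devices are invented for illustration, since a real system would talk to the PLC and the DDA through whatever vendor SDK or industrial protocol it uses.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol, Tuple


class Positioner(Protocol):
    """Hypothetical interface to a PLC-controlled manipulator."""
    def move_to(self, x_mm: float, y_mm: float, angle_deg: float) -> None: ...


class Detector(Protocol):
    """Hypothetical interface to a DDA; returns one frame per call."""
    def acquire(self) -> List[List[int]]: ...


@dataclass(frozen=True)
class View:
    name: str
    x_mm: float
    y_mm: float
    angle_deg: float


def run_inspection(plan: List[View], positioner: Positioner,
                   detector: Detector) -> Dict[str, List[List[int]]]:
    """Visit every view in order and capture one image per view."""
    images: Dict[str, List[List[int]]] = {}
    for view in plan:
        positioner.move_to(view.x_mm, view.y_mm, view.angle_deg)
        images[view.name] = detector.acquire()  # same detector reused every shot
    return images


# --- Fake devices so the sketch runs without hardware ---
class FakePositioner:
    def __init__(self) -> None:
        self.history: List[Tuple[float, float, float]] = []

    def move_to(self, x_mm: float, y_mm: float, angle_deg: float) -> None:
        self.history.append((x_mm, y_mm, angle_deg))


class FakeDetector:
    def __init__(self) -> None:
        self.shots = 0

    def acquire(self) -> List[List[int]]:
        self.shots += 1
        return [[self.shots] * 4 for _ in range(4)]  # tiny dummy 4x4 frame


if __name__ == "__main__":
    plan = [View("weld-A", 0, 0, 0), View("weld-A-rot", 0, 0, 90),
            View("weld-B", 150, 0, 0)]
    pos, det = FakePositioner(), FakeDetector()
    result = run_inspection(plan, pos, det)
    print(list(result), "captured in", det.shots, "exposures")
```

Because nothing is consumed per shot, rerunning the same plan on the next part costs only time, which is the practical difference from single-use film.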

These images can also be sent directly to computer vision models for AI-assisted analysis, a capability that some NDT manufacturers are currently investing heavily in.